As you might know, due to invasive changes in PackageKit, I am currently rewriting the 3rd-party application installer Listaller. Since I am not the only one looking at the 3rd-party app-installation issue (there is a larger effort going on at GNOME, based on Lennart’s ideas), it makes sense to redesign some concepts of Listaller.

Currently, dependencies and applications are installed into directories in /opt, and Listaller contains some logic to make applications find dependencies, and to talk to the package manager to install missing things. This has some drawbacks, like the need to install an application before using it, the need for applications to be relocatable, and application-installations being non-atomic.

Glick2

There is/was another 3rd-party app installer approach on the GNOME side, by Alexander Larsson, called Glick2. Glick uses application bundles (do you remember Klik from back in the day?) mounted via FUSE. This allows some neat features, like atomic installations and software upgrades, no need for relocatable apps and no need to install the application.

However, it also has disadvantages. Quoting the introduction document for Glick2:

“Bundling isn’t perfect, there are some well known disadvantages. Increased disk footprint is one, although current storage space size makes this not such a big issues. Another problem is with security (or bugfix) updates in bundled libraries. With bundled libraries its much harder to upgrade a single library, as you need to find and upgrade each app that uses it. Better tooling and upgrader support can lessen the impact of this, but not completely eliminate it.”

This is what Listaller does better, since it was designed to go to great lengths to avoid duplicating code.

Also, currently Glick doesn’t have support for updates and software-repositories, which Listaller had.

Combining Listaller and Glick ideas

So, why not combine the ideas of Listaller and Glick? In order to have Glick share resources, the system needs to know which shared resources are available. This is not possible if there is one huge Glick bundle containing all of the application’s dependencies. So I modularized Glick bundles so that each contains just one software component, e.g. GTK+, Qt or GStreamer, or even a larger framework (e.g. “GNOME 3.14 Platform”). These components are identified using AppStream XML metadata, which allows them to be installed from the distributor’s software repositories as well, if that is wanted.

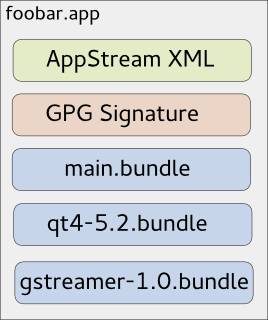

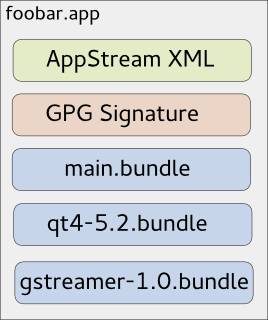

If you now want to deploy your application, you first create a Glick bundle for it. Then, in a second step, you bundle your application bundle with its dependencies in one larger tarball, which can also be GPG signed and can contain additional metadata.
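To make the second step more concrete, here is a minimal sketch of packing a metabundle with Python’s standard `tarfile` module. The file names (`*.bundle`, `metadata.json`), the JSON metadata format and the function names are assumptions made for illustration only, not the actual layout; GPG signing is left out.

```python
import io
import json
import tarfile


def build_metabundle(app_name, app_bundle, dependency_bundles, metadata):
    """Pack an application bundle and its dependency bundles into one tarball.

    app_bundle:         bytes of the application's own bundle
    dependency_bundles: {file_name: bytes} for each dependency bundle
    metadata:           additional information, stored here as JSON (assumption)
    Returns the gzip-compressed tarball as bytes.
    """
    members = {f"{app_name}.bundle": app_bundle, **dependency_bundles}
    members["metadata.json"] = json.dumps(metadata, sort_keys=True).encode("utf-8")

    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w:gz") as tar:
        for name, payload in members.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(payload)
            tar.addfile(info, io.BytesIO(payload))
    return buffer.getvalue()


def list_metabundle(data):
    """Return the member names of a metabundle tarball."""
    with tarfile.open(fileobj=io.BytesIO(data), mode="r:gz") as tar:
        return tar.getnames()


# Hypothetical example: a player application shipping Qt and GStreamer bundles.
metabundle = build_metabundle(
    "player",
    b"application payload",
    {"qt5.bundle": b"qt payload", "gstreamer.bundle": b"gstreamer payload"},
    {"id": "player", "requires": ["qt5", "gstreamer"]},
)
print(list_metabundle(metabundle))
```

The point of the sketch is only that the dependencies travel as separate, individually identifiable members of the tarball rather than being merged into the application bundle.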

The resulting “metabundle” will look like this:

This doesn’t look like we share resources yet, right? The dependencies are still bundled with the application requiring them. The trick lies in the “installation” step: While the application above can be executed right away without installing it, there will also be an option to install it. For the user, this will mean that the application shows up in GNOME-Shell’s overview or KDE’s Plasma launcher, gets properly registered with mimetypes and is – if installed for all users – available system-wide.

Technically, this will mean that the application’s main bundle is extracted and moved to a special location on the file system, as are the dependency bundles. If bundles already exist, they will not be installed again, and the new application will simply use the existing software. Since the bundles contain information about their dependencies, the system is able to determine which software is still needed and which can simply be deleted from the installation directories.
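The install and cleanup logic described above can be sketched roughly as follows. This is an in-memory illustration under my own assumptions: the names `Bundle`, `BundleStore`, `requires` and `is_application` are hypothetical, and a real implementation would work on files in the installation directory rather than on a dictionary.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Bundle:
    """One modular bundle: a single software component plus its declared dependencies."""
    component_id: str            # hypothetical identifier, e.g. "qt5"
    requires: tuple = ()         # component ids this bundle depends on
    is_application: bool = False


@dataclass
class BundleStore:
    """In-memory stand-in for the special install location on the file system."""
    installed: dict = field(default_factory=dict)

    def install(self, metabundle):
        """Install every bundle of a metabundle, skipping ones already present."""
        newly_installed = []
        for bundle in metabundle:
            if bundle.component_id in self.installed:
                continue  # already there: the new application reuses it
            self.installed[bundle.component_id] = bundle
            newly_installed.append(bundle.component_id)
        return newly_installed

    def remove_application(self, component_id):
        """Remove an application, then drop dependencies nothing needs anymore."""
        del self.installed[component_id]
        return self.collect_unused()

    def collect_unused(self):
        """Delete bundles that are not reachable from any installed application."""
        needed = set()
        stack = [b.component_id for b in self.installed.values() if b.is_application]
        while stack:
            cid = stack.pop()
            if cid in needed or cid not in self.installed:
                continue
            needed.add(cid)
            stack.extend(self.installed[cid].requires)
        removed = sorted(set(self.installed) - needed)
        for cid in removed:
            del self.installed[cid]
        return removed


# Hypothetical example: two applications sharing one Qt bundle.
qt = Bundle("qt5")
gst = Bundle("gstreamer")
player = Bundle("player", requires=("qt5", "gstreamer"), is_application=True)
editor = Bundle("editor", requires=("qt5",), is_application=True)

store = BundleStore()
store.install([player, qt, gst])     # installs all three bundles
store.install([editor, qt])          # qt5 already exists and is skipped
store.remove_application("player")   # gstreamer becomes unused, qt5 is kept
```

Following the declared dependencies from the installed applications is what lets the system decide that `gstreamer` can go while `qt5` has to stay for the editor.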

If the application is started now, the bundles are combined and mounted, so the application can see the libraries it depends on.

Additionally, this concept allows secure updates of applications and shared resources. The bundle metadata contains a URL which points to a bundle repository. If new versions are released, the system’s auto-updater can automatically pick these up and install them – this means e.g. the Qt bundle will receive security updates, even if the developer who shipped it with his/her app didn’t think of updating it.
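As a rough sketch of the update check, assume the repository behind that URL publishes an index mapping component ids to their latest version, and that versions are plain dotted numbers. Both are simplifying assumptions of mine, as are the function names; fetching the index and verifying signatures are omitted.

```python
def parse_version(text):
    """Turn a dotted numeric version like '5.3.1' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def find_updates(installed, repo_index):
    """Return {component_id: new_version} for every bundle with a newer release.

    installed:  {component_id: installed_version}
    repo_index: {component_id: latest_version}, as it might be fetched from the
                repository URL in the bundle metadata (fetching is omitted here).
    """
    updates = {}
    for component_id, current in installed.items():
        latest = repo_index.get(component_id)
        if latest is not None and parse_version(latest) > parse_version(current):
            updates[component_id] = latest
    return updates


# Hypothetical example: the shared Qt bundle has a newer release, the app does not.
installed = {"qt5": "5.3.1", "player": "1.0"}
repo_index = {"qt5": "5.3.2", "player": "1.0"}
print(find_updates(installed, repo_index))
```

Because the shared Qt bundle is tracked as its own component, it gets updated independently of the application that originally shipped it.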

Conclusion

So far, no production code exists for this – I just have a proof-of-concept here. But I quite like the idea, and I am thinking about going further in that direction, since it allows deploying applications on the Linux desktop as well as deploying software on servers in a way which plays nicely with the native package manager, and which does not duplicate much code (less risk of having outdated libraries with security flaws around).

However, there might be issues I haven’t thought about yet. Also, it makes sense to look at GNOME to see how the whole “3rd-party app deployment” issue develops. In case I go further with Listaller-NEXT, it is highly likely that it will make use of the ideas sketched above (comments and feedback are more than welcome!).

nightmare

bundles no-no

if you don’t break security, you break dependency chain of tested code.

let’s go fix all the things broken by systemd, starting with the community, the knowledge base, the corner cases and the user base.

time remaining? let’s help the current infrastructure to work, before breaking it more.

Hello Matthias,

I really like the idea and had some related thoughts as well

Some questions:

1) Once installed, libraries may be updated, but updated libraries (even with only security updates) may break existing applications.

I think it would be neat to have automatic security updates for the libraries, but to keep the old version until every application that used it has been executed once. After execution, a dialog could ask something like “Did the program run successfully?”, or maybe a right-click on the program could offer, under properties, a choice of which libraries to use. This would require integration into the file managers though…

2) What would be the “core os” not contained in bundles?

Kernel + X/Wayland?

Kernel + systemd + wayland + desktop environment?

3) How would you handle plugins?

Some programs may need to put plugin files directly into the installation directory instead of putting them in $HOME/.myprog/plugins

A plugin that is installed system-wide rather than for a specific user needs to go into the installation directory anyway.

4) How will inter-process communication take place?

Will dbus work properly?

If software vendors supported bundles both with all dependencies and without dependencies, it would be great. Also look at YaST one-click install. The tarball can contain a repository URL, but packages from these repositories would not be installed globally (they would be installed per application). You could also provide a sandbox for the application.